tViewport() const tree = await page._nd('Page.getResourceTree') for (const resource of ameTree.
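The fragment above is truncated, but it refers to the DevTools Protocol method `Page.getResourceTree`, which lists the resources a page has already loaded. What follows is only a sketch of how that idea could work, not a reconstruction of the original script. The function name `getCachedImages` is hypothetical, and the sketch opens its own protocol session with `createCDPSession()` instead of the call used in the fragment. It also assumes `page` is a Puppeteer page that has finished loading, and it only looks at the top-level frame.

```javascript
// Sketch: read already-loaded images through the DevTools Protocol.
// `page` is assumed to be a Puppeteer Page that has finished loading.
async function getCachedImages(page) {
  // Open a dedicated DevTools Protocol session for this page.
  const client = await page.target().createCDPSession();
  await client.send('Page.enable');

  // The resource tree lists everything the top-level frame has loaded.
  const { frameTree } = await client.send('Page.getResourceTree');
  const images = [];

  for (const resource of frameTree.resources) {
    if (resource.type !== 'Image') continue;

    // Ask the browser for the bytes it already holds for this URL.
    const { content, base64Encoded } = await client.send(
      'Page.getResourceContent',
      { frameId: frameTree.frame.id, url: resource.url }
    );

    images.push({
      url: resource.url,
      mimeType: resource.mimeType,
      // Binary resources come back base64 encoded; text ones (SVG) do not.
      data: Buffer.from(content, base64Encoded ? 'base64' : 'utf8'),
    });
  }

  await client.detach();
  return images;
}
```

Each returned entry carries a `Buffer` in `data`, so it can be written to disk with `fs.writeFileSync()` without requesting the image from the server a second time.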

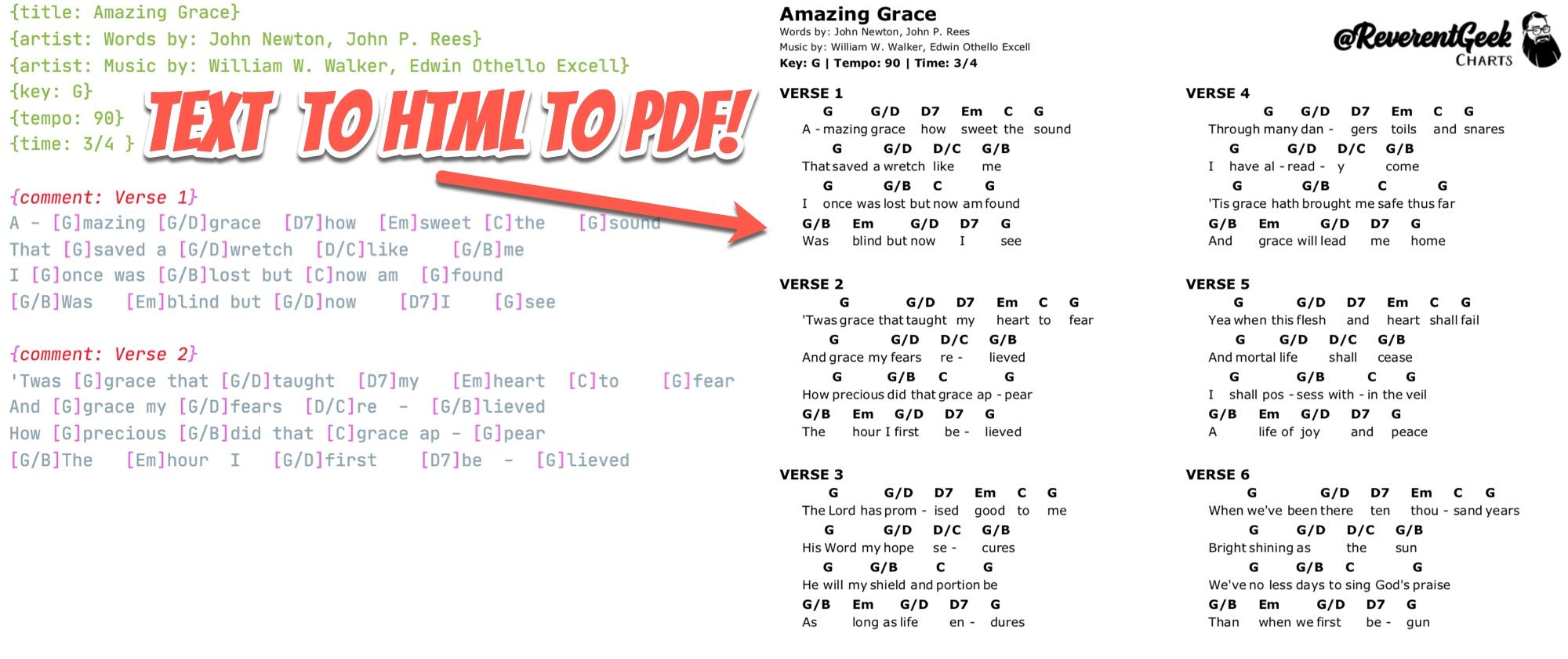

To make things concrete, I’ll mostly be extracting the Intoli logo rendered as a PNG, JPG, and SVG from this very page. The dimensions of the first two images are 605 x 605 pixels, but they appear smaller on the screen because they are placed in elements which restrict their size. Each of the images has its extension as its id attribute so that it can easily be selected.

To get started, install Yarn (unless you prefer a different package manager), create a new project folder, and install Puppeteer, starting with `mkdir image-extraction`. The last line of the install commands will download and configure a copy of Chromium to be used by Puppeteer.

Let’s see what a script that visits this page and takes a screenshot of the Intoli logo looks like. It begins with `const puppeteer = require('puppeteer')` and `const browser = await puppeteer.launch()`.

There are many ways you can download files with Puppeteer. Some downloads only start, in a normal browser, after a click has run some JavaScript code. This approach seems the easier one to implement in a script, because for some types of downloads you never get to see the actual URL at the DOM layer. Once you have a solid understanding of Puppeteer’s API and how it fits together in the Node.js ecosystem, you can come up with custom solutions best suited for you. Further reading: how to submit forms with Puppeteer.
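A script that visits a page and screenshots a single image, as described above, could be sketched roughly as follows. This is an illustration and not the original script. The helper name `screenshotElement`, the output file name, and the viewport size are made up. The `#png` selector assumes the PNG image uses its extension as its id. The helper takes an existing Puppeteer `page`, and the commented usage shows how it might be wired up.

```javascript
// Sketch: screenshot one element on a page that Puppeteer has open.
// `page` is a Puppeteer Page; `selector` and `outputPath` are illustrative.
async function screenshotElement(page, selector, outputPath) {
  // Wait until the element exists in the DOM, then grab a handle to it.
  await page.waitForSelector(selector);
  const element = await page.$(selector);
  if (!element) {
    throw new Error(`No element matches ${selector}`);
  }
  // ElementHandle.screenshot() captures only this element's bounding box.
  await element.screenshot({ path: outputPath });
  return outputPath;
}

// Example usage (requires the `puppeteer` package; the URL is a placeholder):
//
// const puppeteer = require('puppeteer');
// (async () => {
//   const browser = await puppeteer.launch();
//   const page = await browser.newPage();
//   await page.setViewport({ width: 1200, height: 800 });
//   await page.goto('https://example.com/page-with-images');
//   await screenshotElement(page, '#png', 'logo-screenshot.png');
//   await browser.close();
// })();
```

A screenshot captures the image as it is rendered, so the saved file has the on-screen size and not the image's native 605 x 605 dimensions.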

In this post, I will highlight a few ways to save images while scraping the web through a headless browser. The simplest solution would be to extract the image URLs from the headless browser and then download them separately, but what if that’s not possible? Perhaps the images you need are generated dynamically or you’re visiting a website which only serves images to logged-in users. Maybe you just don’t want to put unnecessary strain on their servers by requesting the image multiple times. Whatever your motivation, there are plenty of options at your disposal.

The techniques covered in this post are roughly split into those that execute JavaScript on the page and those that try to extract a cached or in-memory version of the image. I will use Puppeteer, a JavaScript browser automation framework that uses the DevTools Protocol API to drive a bundled version of Chromium, but you should be able to achieve similar results with other headless technologies, like Selenium.

`const puppeteer = require('puppeteer')` `await tViewport(`

It goes to a generic Google search and downloads the Google image at the top left.
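The "simplest solution" mentioned above (read the image URLs in the browser, then download them separately) might look something like the sketch below. All three function names are hypothetical. The sketch assumes Node 18 or newer for the global `fetch`, and that the images are reachable without the browser's cookies or session.

```javascript
const fs = require('fs');
const path = require('path');

// Derive a local file name from an image URL (hypothetical helper).
function fileNameFromUrl(imageUrl, fallback = 'image') {
  const { pathname } = new URL(imageUrl);
  // The query string is not part of `pathname`, so it is dropped here.
  return path.posix.basename(pathname) || fallback;
}

// Collect the URLs of all <img> elements on a Puppeteer page.
async function collectImageUrls(page) {
  // The callback runs inside the page; `img.src` is already absolute.
  const urls = await page.$$eval('img', (imgs) => imgs.map((img) => img.src));
  // Keep only http(s) URLs (skipping data: URLs) and remove duplicates.
  return [...new Set(urls.filter((url) => /^https?:/.test(url)))];
}

// Download one image outside the browser and write it to `directory`.
async function downloadImage(imageUrl, directory) {
  const response = await fetch(imageUrl);
  if (!response.ok) {
    throw new Error(`Request for ${imageUrl} failed: ${response.status}`);
  }
  const target = path.join(directory, fileNameFromUrl(imageUrl));
  const data = Buffer.from(await response.arrayBuffer());
  await fs.promises.writeFile(target, data);
  return target;
}
```

Because `downloadImage` requests each file again from outside the browser, it hits exactly the limits described above: it fails for images served only to logged-in users, and it adds a second request per image.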
